Hybrid Intelligence Platform

Build powerful, safe AI with the Hybrid Intelligence Platform - a complete neuro-symbolic development environment with robust, API-first workflows.

The platform provides all the tools you need to train, inspect, deploy, and inference explainable, adaptive decision models.

Most of the practices and concepts will feel familiar. Inference returns fully explained and justified results, so there are some new and unique components to explore.

Hybrid Intelligence gives you full control over how models are structured, guided, and interpreted. Hybrid Intelligence integrates structured symbolic hierarchies alongside learned models, enabling transparent reasoning aligned with domain ontologies and causal graphs.

Explore Hybrid Intelligence and Neuro-Symbolic AI.

Data Annotation and Enhancement

Hybrid Intelligence lets you shape how the model understands your data before Induction (training) begins.

Use tags, groupings, constraints, and metadata to embed domain knowledge, define semantic structure and guide reasoning.

Explore how these inputs guide how the model forms its reasoning, ensuring the model reflects your knowledge and the context of your domain.

-

Human Readability & Interpretability

Friendly Names: Add human-readable labels at varying levels of verbosity. These labels are carried through to model logic, insights, and explanation layers.

Units and Dimensions: Define units (e.g. days, dollars) and dimensions (e.g. time, volume) to improve interpretability and support dimensional reasoning.

Semantic Types / Ontology Links: Map features to standard taxonomies or domain ontologies to support semantic consistency, improve integration, and enhance reasoning quality.

Structural Groupings & Relationships

Feature Groups: Organise features into logical groupings to support structured modelling and explanation across related variables.

Feature Value Groups: Cluster specific values within a feature (e.g. product categories, risk tiers) to enable abstraction and simplify reasoning.

High Cardinality Treatments: Apply compression, grouping, or encoding strategies to manage and simplify features with large, sparse value sets and to improve generalization.

Temporal Relevance: Mark features with time sensitivity or decay characteristics (e.g. last updated, expiry) to support behavioural reasoning and time-based partitioning.

Causal Tags: Annotate features with causal roles (e.g. predictor, mediator, outcome) to support causal inference and scenario simulation.

Governance, Fairness & Compliance

Protected Features: Flag attributes such as age, gender, ethnicity, or other legally or ethically sensitive variables. The platform uses this tagging to enable fairness checks, bias mitigation strategies, and compliance with regulatory frameworks (e.g. GDPR, EEOC, EU AI Act).

Policy Constraints: Define limits, thresholds, or guardrails that reflect business rules or regulatory requirements. These can be carried into model logic and validation.

Data Provenance and Traceability: Track the source and lineage of each feature to support traceability and compliance. The platform supports full traceability of model versions, experiment lineage, and deployment artifacts, enabling robust governance and audit workflows.

Operational Behaviour & Defaults

Handling Missing and Rare Values: Set rules or preferences for imputing, excluding, or flagging missing values during training and inference. Set default handling for unseen or rare values during inference.

Feature Types and Roles: Specify whether features represent identifiers, flags, continuous measures, temporal data, or reference variables. This helps the platform apply appropriate transformations and reasoning patterns.

Data Handling & Configuration

The Hybrid Intelligence platform gives you direct control over how data is interpreted, structured, and prepared for induction.

Configuration options across data typing, sampling and validation shape how models learn, form logic, and stay aligned with real-world context.

Explore the detail to see how early design choices in Hybrid Intelligence shape stability, adaptability, and operational integrity.

-

Column Typing

Define how each column is interpreted and processed throughout the modelling workflow.

Determine how data is encoded, whether a feature contributes to reasoning, and how it is treated in structure and training.

Ensures numeric, categorical, and boolean values are handled appropriately, and non-informative fields are excluded.

Directly influence how features are incorporated into decision logic, affecting how models structure rules and interpret inputs.

Infrequent Value Grouping

Combines low-frequency categorical values into a shared fallback category.

Sets the minimum number of occurrences a value must have to be treated as its own category.

Allows values below this threshold to be grouped under “Other,” reducing complexity and improving generalisation during model induction.

Supports cleaner logic formation by avoiding overfitting to rare cases

Focuses structural reasoning on patterns with real signal.

Ignored columns

Determines which columns are excluded from model training but retained in outputs for reference and traceability

Supports identification, reconciliation, and audit use cases without affecting predictions.

Histogram sampling

Builds summaries of column distributions using a representative subset of the data.

Captures skew, spread, and rare categories, producing bin-based summaries for numeric features and frequency counts for categorical ones.

Support data quality checks

Help interpret feature behaviour

Inform how features are split and ordered when constructing the model’s reasoning structure.

Guide the creation of models where structure reflects meaningful variation in the data - not just statistical signal.

Anomaly detection

Highlights unusual patterns or values within each feature that may indicate data quality issues or structural outliers.

Identifies values that fall outside expected ranges or distributions, based on frequency, variance, or deviation from typical patterns.

Helps surface data drift, encoding errors, or edge cases early in the workflow, before they impact model training.

Ensures that rare or extreme values are examined intentionally, informing decisions about inclusion, grouping, or exclusion within a structured reasoning model.

Unseen Category Handling

Ensures the model can respond sensibly to inputs it has not encountered during training.

Allows handling of new or evolving categorical values in real-world data avoiding brittleness or undefined model behaviour.

Explicitly treats these unknowns as a distinct category, preserving structural integrity and preventing silent failures.

This allows Hybrid Intelligence models to generalise reliably while maintaining traceability and control, even in the face of shifting data.

Missing Value Imputation

Ensures that gaps in the data do not undermine model structure or lead to silent data loss.

Avoids discarding incomplete rows by inherently applying a consistent treatment

Allows the model to remain stable and interpretable even when inputs are partially missing.

Preserves the integrity of onboarding

Reduces unnecessary data loss

Ensures that missingness itself can be surfaced and reasoned about within Hybrid Intelligence models.

Imbalanced Dataset Handling

Ensures that model performance is not skewed by dominant class patterns.

Avoids models learning to ignore rare but important cases.

Rebalances class representation to preserves signal from underrepresented outcomes.

Creates space for distinct reasoning paths to emerge across classes

Enables fairer, more structured decision models that don’t collapse around statistical dominance.

Shard Configuration

Controls how the dataset is divided for processing and model induction.

Enables parallelisation but also influences how patterns are distributed and captured across the data.

Can affect the formation of logical partitions

Ensures that structure is learned efficiently without fragmenting signal or losing continuity.

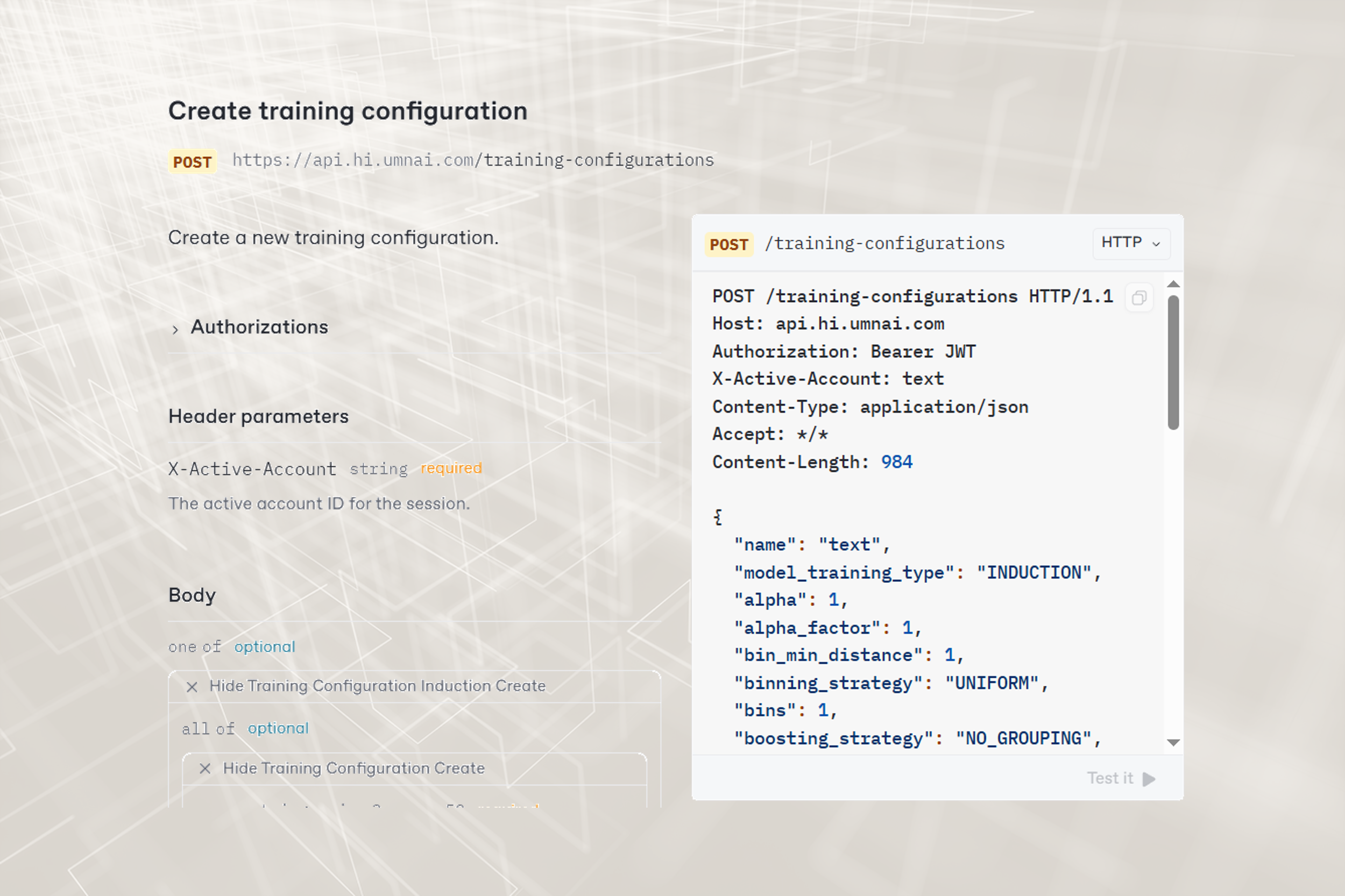

Induction

The Hybrid Intelligence Platform gives you fine-grained control over how models are built and trained. Induction is the Hybrid Intelligence training method, combining deep learning with symbolic learning.

Unlike conventional AI, structural controls let you shape how models form - prioritizing business goals, interpretability, and operational alignment.

Explore how training configuration allows you to guide what the model learns and how it reasons, ensuring models remain transparent, disciplined, and aligned with real-world decision-making.

-

Model Structure & Complexity

These define the architectural boundaries of the model (how deep, wide, or sparse its structure can become).

Guide the formation of logic modules.

Control expressive power.

Enables the prioritisation of interpretability, sparsity, and structural discipline.

Partitioning & Logic Formation parameters

Shape how the platform detects and represents meaningful variation in the data.

Influence how thresholds are learned, features are segmented, and partitions evolve during induction

Support logic fidelity, robust structure, and data-grounded reasoning throughout the model.

Training Dynamics & Iterative Learning settings

Govern how training unfolds across rounds and modules.

Manages learning rates, iteration pacing, and stopping conditions,

Allow models to evolve gradually while maintaining alignment with performance targets.

Support training stability, adaptivity, and structural convergence.

Regularisation & Optimisation settings

Calibrate how the model balances performance and generalisation.

Fine-tune how complexity is introduced, feature selection is enforced, and redundancy is controlled

Enable the platform to favour parsimonious structure and resilient performance with full explainability.

Evaluation, Validation & Model Selection parameters

Define how the platform allocates data for training and validation, and how it determines when to adapt or halt.

Ensure models are evaluated fairly and reliably, using scalable and rigorous validation regimes.

Supports trustworthy evaluation, adaptive complexity, and measured progression.

Ensembles & Aggregation parameters

Control how the platform generates, trims, and combines multiple estimators.

Supports modular learning while constraining noise and redundancy

Enables the synthesis of diverse reasoning paths into a coherent and robust model with controlled variance and high interpretability.

Reasoning Modes and Capabilities

Determine which foundational logic types the model supports

Includes causal reasoning, estimator-based logic, or both.

Define the model’s expressive capacity ensuring alignment with the intended analytical or operational role.

Support for structured planning via a Goal–Plan–Action framework, enabling decision models that go beyond prediction to deliver verifiable sequences of action under clear goals and constraints.

Views

Hybrid Intelligence gives you full visibility into how your models reason, predict, and behave. Views exposes every logic path, feature interaction, and prediction influence in clear, traceable terms.

They articulate model reasoning and decision quality in real time.

Explore how views explain and validate models and decisions ensuring full oversight, control supporting trust and compliance.

-

Model Structure View

Gives a complete breakdown of the trained model’s internal architecture

Shows every module, partition, rule, and bin

Defines how decisions are made.

Exposes the logic paths the model uses, the features that drive them, and the structure those features take.

Is the foundation for inspecting how the model reasons, understanding what influences its outputs, and tracing each part of the decision structure back to its components.

Essential for auditability, validation, and model governance.

Results View

Returns the model’s prediction for a given input

Returns all data needed to explain how that prediction was made.

Includes

the original query

the predicted output,

detailed attribution data showing for features, modules, and partitions

The foundation for traceable inference, allowing developers and downstream systems to connect every prediction to a transparent decision path.

Feature Attribution View

Shows how much each input feature contributed to a specific prediction.

Provides a breakdown of influence across all features

Understand the decision in simple, measurable terms.

Applied to batches of inputs, it also supports feature importance analysis across cohorts and datasets

Identify which variables consistently drive outcomes.

Module Attribution View

Breaks down a prediction in terms of the modules and logic paths that generated it.

Connects each contribution to specific expressions, partitions, and feature conditions

Reveals how the model’s structured reasoning flows through its internal components.

Makes it possible to trace how the influences on a decision were constructed and applied.

Decision Action Table View

Breaks down a prediction by model partition

Shows how each rule and expression contributed to the result.

Includes detailed attribution statistics, bin definitions, and activation counts for every condition the model evaluated.

Audit model decisions by inspecting partition-level contributions, rule activations, and attribution statistics

Ideal for deployment validation, behavioural tracing, and regulatory audits.

Designed for programmatic consumption

Especially valuable for comparing query behaviour to training baselines

Powerful tool for audit, monitoring, and deployment validation.

Module Dependency View

Provides a detailed picture of how a specific module behaves

Shows how each input feature/interaction influences the predictions within that module.

Connects each output to the specific rules, conditions, and feature values that produced it

Revealing how changes in input affect attribution.

Useful for understanding model sensitivity

Used to explore decision logic in context

Used to validate that feature interactions behave as intended.

Anomaly View

Surfaces statistical imbalances and coverage gaps in the feature space by analysing the bins used during modelling.

Highlights regions of the data that may lack representation, such as sparse bins, overly wide intervals, or high-density outliers

Enables teams to identify where predictions may be less stable or where more data may be needed.

Supports proactive model validation and targeted data collection, especially in edge cases or underrepresented populations.

Strengths and Weaknesses (SAW) View

Provides an estimate of how strong, uncertain, and potentially inaccurate a prediction is, based on actual outcomes.

Compares predictions with known results

Quantifies how confident the model is and where its weaknesses lie.

Helps assess reliability at the individual prediction level

Supports better downstream decisions and risk management.